Training GPT-4 would take centuries on an ordinary PC.

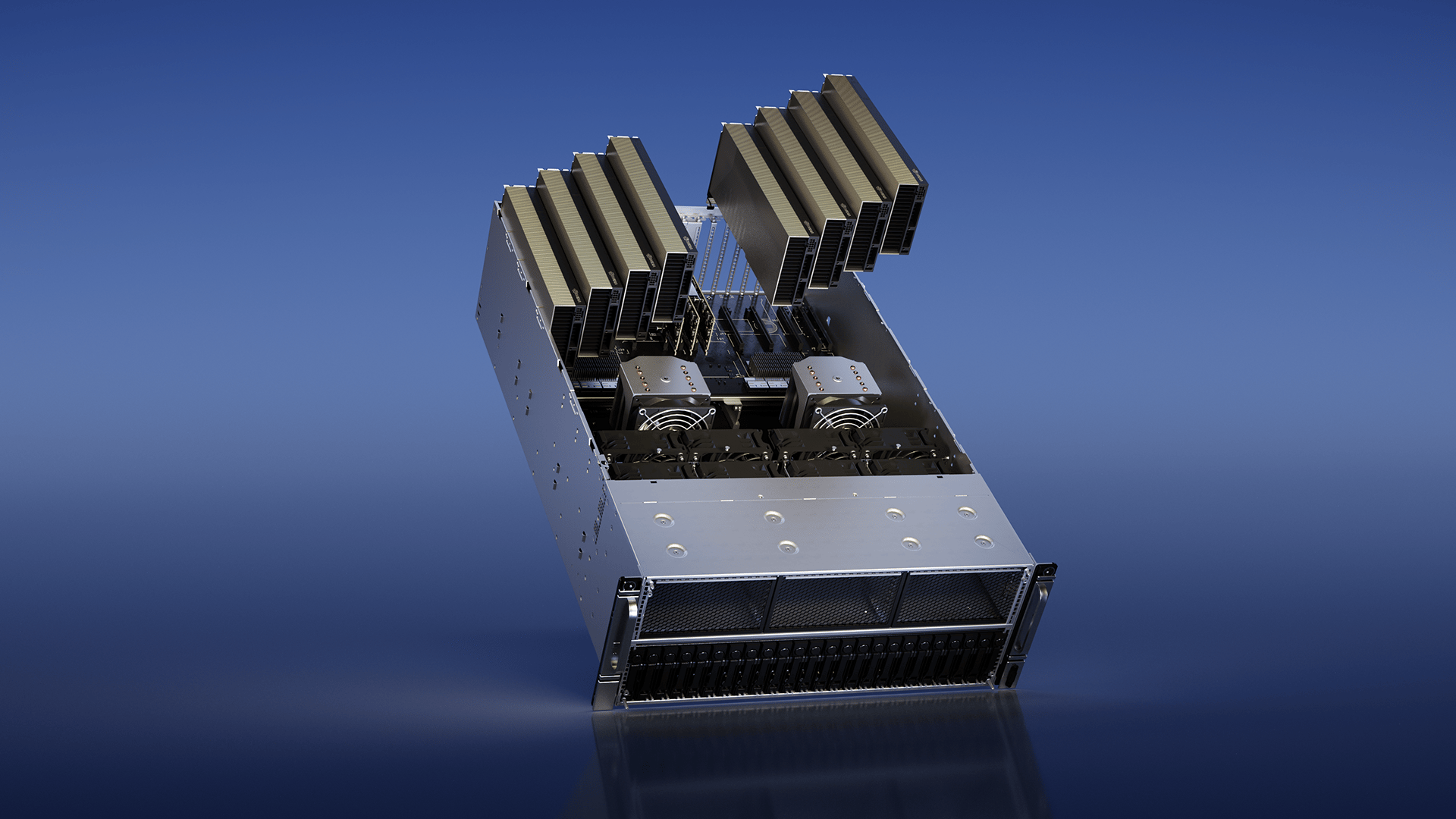

NVIDIA DGX System

Even running on thousands of NVIDIA GPU servers, costing a fortune and drawing enough power to run a small city, it reportedly took around 90 days to train GPT-4 to talk.
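How long is "centuries"? A rough back-of-envelope sketch helps. It assumes the roughly 25,000 GPUs and roughly 90 days reported for GPT-4, and makes two generous, hypothetical simplifications: that your PC's GPU is exactly as fast as one of the data-center chips, and that the work splits perfectly across GPUs.

```python
# Back-of-envelope: how long would one GPU take to do the work of a whole cluster?
# Assumptions (reported figures, not official OpenAI data):
#   - about 25,000 GPUs trained GPT-4
#   - training ran for about 90 days
# Hypothetical simplifications: a single PC GPU is exactly as fast as one
# cluster GPU, and the work divides perfectly (real scaling is never this clean).

CLUSTER_GPUS = 25_000
TRAINING_DAYS = 90
DAYS_PER_YEAR = 365.25


def single_gpu_years(num_gpus: int, days: float) -> float:
    """Convert a cluster's run into years of work for one equally fast GPU."""
    gpu_days = num_gpus * days  # total GPU-days of work
    return gpu_days / DAYS_PER_YEAR


years = single_gpu_years(CLUSTER_GPUS, TRAINING_DAYS)
print(f"{CLUSTER_GPUS * TRAINING_DAYS:,} GPU-days = about {years:,.0f} years on one GPU")
```

Under those assumptions the answer is about 6,160 years. A real consumer card is slower than a data-center GPU and could not even hold the model in memory, so "centuries" is, if anything, an understatement.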

NVIDIA doesn’t just make GPUs for your PC; it’s powering the future.

We talk about OpenAI constantly, but behind every GPT prompt sits a vast array of GPUs doing the heavy lifting around the clock, working while the rest of us are asleep. It’s time we talked about the powerhouse behind OpenAI.

Meet the Powerhouse of AI:

For many, NVIDIA is still the company that makes graphics cards for ordinary PCs: the chips powering your Steam library or video-editing setup.

However, the hardware behind GPT-4 and other AI models is on an entirely different level.

Your PC might be running on a $500 graphics card. GPT-4 was trained on NVIDIA’s data-center A100 chips, and today’s flagship, the H100, costs around $40,000 apiece.

And they’re not used one at a time.

These chips are bundled, eight at a time, into machines called DGX servers (about $300,000 each).

Those servers are then linked together into DGX SuperPODs: full-scale AI factories.

OpenAI reportedly used about 25,000 A100s to train GPT-4. At today’s H100 prices, a cluster that size would cost roughly $1 billion in chips alone.
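The arithmetic behind a figure of around $1 billion is simple enough to sketch. This snippet uses the article's round price points (about $40,000 per H100 and about $300,000 per eight-GPU DGX server) as assumptions; real prices vary by contract, and it ignores networking, storage, and facilities.

```python
# Rough hardware-cost sketch using the article's round price points.
# Assumptions: ~$40,000 per H100 and ~$300,000 per DGX server holding 8 GPUs.
# Real contract prices vary; networking, storage, and buildings are ignored.

NUM_GPUS = 25_000
PRICE_PER_GPU = 40_000    # USD, approximate H100 price
GPUS_PER_DGX = 8          # a DGX server holds eight GPUs
PRICE_PER_DGX = 300_000   # USD, approximate DGX server price


def chip_cost(num_gpus: int, price_per_gpu: int) -> int:
    """Cost of the GPUs alone, priced one by one."""
    return num_gpus * price_per_gpu


def server_cost(num_gpus: int, gpus_per_server: int, price_per_server: int) -> int:
    """Cost when GPUs are bought as whole servers (rounding up to full servers)."""
    servers = -(-num_gpus // gpus_per_server)  # ceiling division
    return servers * price_per_server


print(f"GPUs alone:  ${chip_cost(NUM_GPUS, PRICE_PER_GPU):,}")
print(f"DGX servers: ${server_cost(NUM_GPUS, GPUS_PER_DGX, PRICE_PER_DGX):,}")
```

The two routes give $1,000,000,000 for the GPUs alone and $937,500,000 for 3,125 servers. They don't agree exactly because the round prices are themselves inconsistent (eight GPUs at $40,000 is $320,000, more than the quoted server price), so treat both as order-of-magnitude estimates.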

The electricity bill is just as mind-blowing.

Training GPT-3 used an estimated 1.3 gigawatt-hours of electricity, enough to power more than 100 average U.S. homes for a year.

GPT-4 likely burned through far more.
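To put those energy figures in perspective, here is a rough sketch. Every input is an assumption: the 1.3 GWh GPT-3 estimate from the text, a hypothetical household consumption of 10,500 kWh per year, and a hypothetical GPT-4-scale cluster of 25,000 GPUs drawing 400 W each, running flat out for 90 days, with a hypothetical 1.2× overhead multiplier for cooling and networking.

```python
# Rough energy comparison. All inputs are assumptions, not measured data:
#   - GPT-3 training: ~1.3 GWh (published estimate)
#   - average household: 10,500 kWh per year (hypothetical round figure)
#   - GPT-4-scale cluster: 25,000 GPUs at 400 W each, flat out for 90 days
#   - data-center overhead (cooling, networking): hypothetical 1.2x multiplier

KWH_PER_HOME_YEAR = 10_500


def homes_powered_for_a_year(kwh: float) -> float:
    """How many households this much energy would supply for a year."""
    return kwh / KWH_PER_HOME_YEAR


def cluster_energy_kwh(num_gpus: int, watts_per_gpu: float, days: float,
                       overhead: float = 1.0) -> float:
    """Energy drawn by a cluster running continuously, in kWh."""
    hours = days * 24
    kilowatts = num_gpus * watts_per_gpu / 1000
    return kilowatts * hours * overhead


gpt3_kwh = 1.3e6  # 1.3 GWh expressed in kWh
gpt4_kwh = cluster_energy_kwh(25_000, 400, 90, overhead=1.2)

print(f"GPT-3: {gpt3_kwh / 1e6:.1f} GWh, about "
      f"{homes_powered_for_a_year(gpt3_kwh):,.0f} homes for a year")
print(f"GPT-4 (sketch): {gpt4_kwh / 1e6:.1f} GWh, about "
      f"{homes_powered_for_a_year(gpt4_kwh):,.0f} homes for a year")
```

With these inputs, GPT-3 comes out at about 124 homes and the GPT-4-scale run at about 25.9 GWh (about 2,469 homes), roughly 20 times more. The real figure depends heavily on actual power draw and facility overhead.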

“We are at the iPhone moment of AI,” NVIDIA CEO Jensen Huang declared in 2023.

Even the most valuable names in AI today (OpenAI, DeepMind, Meta, and Amazon) are competing for NVIDIA’s chips, and some are building their own just to keep up.

It All Matters — More Than You Realize:

NVIDIA has become the most critical company in the AI world, and perhaps even the entire tech industry.

At one point in 2025, it passed Apple as the world’s most valuable company. Why? Because:

Nearly every major AI breakthrough (OpenAI’s GPT models, Meta’s LLaMA, Anthropic’s Claude) has relied on NVIDIA’s chips

Demand for its GPUs outstrips supply, creating a bottleneck

Everyone from startups to Amazon and Google is building alternatives, but none has displaced NVIDIA yet

“The most valuable real estate of the 21st century is no longer land—it’s GPU time.”

Even AI companies are renting GPUs from NVIDIA’s own AI cloud. It’s not just a chip company anymore; it’s becoming the AI powerhouse of the internet.

A near-monopoly, even.

The Next Leap:

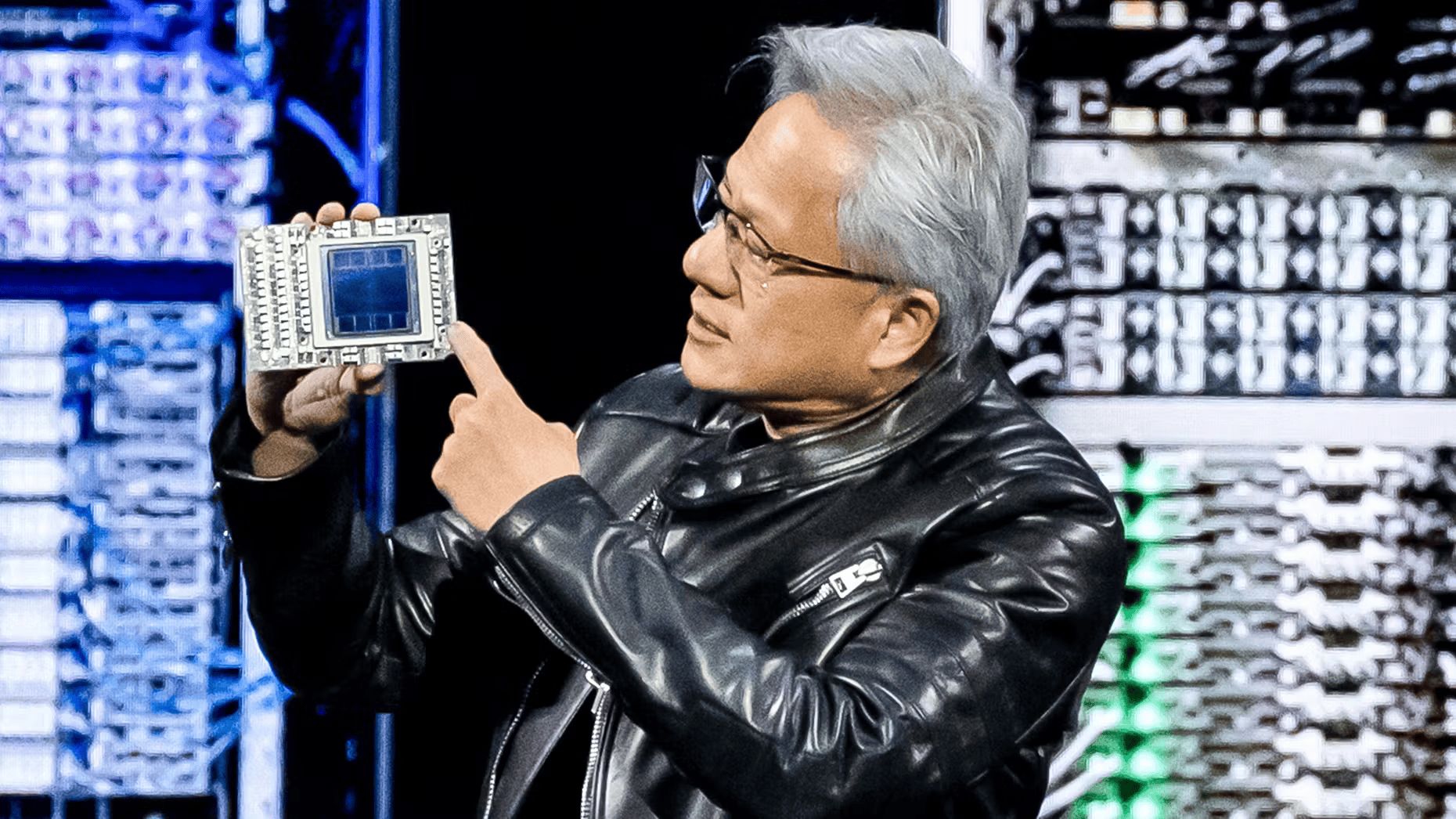

NVIDIA CEO Jensen Huang Unveiling the Blackwell Chip

NVIDIA’s new line of chips, called Blackwell, is now rolling out. These aren’t just faster; they’re designed for a new era of AI.

With:

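Up to 4× faster model training than the H100 (NVIDIA’s own figures)

Up to 30× faster inference (response speed) on large language models

Up to 25× lower cost and energy use for inference

Support for models of up to 10 trillion parameters (GPT-4 is unofficially estimated to have ~1.8T)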

…the leap isn’t just technical—it’s foundational.
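Parameter counts in the trillions translate directly into memory. The sketch below estimates how much memory a model's weights alone occupy at different numeric precisions, and the minimum number of GPUs needed just to hold them. The 192 GB per GPU is an assumed round figure, and real training needs several times more memory for gradients, optimizer state, and activations.

```python
import math

# Rough memory needed just to store a model's weights.
# Assumptions: bytes per parameter follow from numeric precision;
# 192 GB per GPU is a hypothetical round figure for a current data-center GPU.
# Training needs several times more (gradients, optimizer state, activations).

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}
GPU_MEMORY_GB = 192


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Gigabytes needed to store the weights at the given precision."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus_for_weights(num_params: float, precision: str,
                         gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Lower bound on GPUs needed just to hold the weights."""
    return math.ceil(weight_memory_gb(num_params, precision) / gpu_memory_gb)


for label, params in [("1T", 1e12), ("10T", 10e12), ("100T", 100e12)]:
    for precision in ("fp16", "fp4"):
        gb = weight_memory_gb(params, precision)
        gpus = min_gpus_for_weights(params, precision)
        print(f"{label:>4} params @ {precision}: {gb:,.0f} GB of weights, "
              f"at least {gpus} GPUs")
```

Under these assumptions, a 1-trillion-parameter model needs 2,000 GB at 16-bit precision (at least 11 GPUs), while 10 trillion parameters at 4-bit precision still needs 5,000 GB (at least 27 GPUs). Lower-precision formats are one reason larger models become practical.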

Because here’s the bigger picture:

The faster we can train and run large models, the faster we can build more capable, more humanlike intelligence.

In other words:

NVIDIA is removing the speed bumps between us and AGI.

AGI, the point at which AI can perform most intellectual tasks as well as (or better than) humans, won’t be unlocked by one magical algorithm. It will come from:

Bigger models

Faster training

More experimentation

Cheaper, scalable infrastructure

And NVIDIA is enabling all four.

By lowering the cost of experimentation, NVIDIA gives researchers the freedom to try larger models, train longer, and iterate faster—things that would have been impossible just a few years ago.
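One way to see why model size and training length both matter: a common rule of thumb estimates a dense transformer's training compute as roughly 6 × N × D floating-point operations, where N is the number of parameters and D the number of training tokens. The sketch below applies it to a hypothetical 1-trillion-parameter model trained on 10 trillion tokens, on a hypothetical cluster of 25,000 GPUs each sustaining 10^14 FLOP/s, and then on chips 4× faster.

```python
# Rule of thumb for dense transformer training compute: C ~ 6 * N * D FLOPs,
# where N = parameters and D = training tokens.
# All numbers below are hypothetical illustrations, not figures for any real model.

SECONDS_PER_DAY = 86_400


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate total training compute in FLOPs."""
    return 6 * num_params * num_tokens


def training_days(total_flops: float, num_gpus: int,
                  sustained_flops_per_gpu: float) -> float:
    """Wall-clock days, assuming perfect scaling across GPUs."""
    cluster_flops_per_second = num_gpus * sustained_flops_per_gpu
    return total_flops / cluster_flops_per_second / SECONDS_PER_DAY


flops = training_flops(num_params=1e12, num_tokens=10e12)  # hypothetical model
baseline = training_days(flops, num_gpus=25_000, sustained_flops_per_gpu=1e14)
faster = training_days(flops, num_gpus=25_000, sustained_flops_per_gpu=4e14)  # 4x chips

print(f"compute: {flops:.1e} FLOPs")
print(f"baseline cluster: {baseline:.0f} days; 4x faster chips: {faster:.0f} days")
```

With these made-up inputs, training drops from about 278 days to about 69: the kind of difference that decides whether an experiment gets run at all.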

“The most powerful AI systems will emerge from scale, given enough compute and data.”

It’s not just theory anymore.

OpenAI reportedly used about 25,000 NVIDIA GPUs to train GPT-4

xAI and Meta are building their own supercomputers around NVIDIA chips

Cloud platforms like Azure and AWS now rent out entire GPU clusters

And as Blackwell chips arrive, followed eventually by their successor, Rubin, we could see a future where:

AGI isn’t 30 years away—it’s something we may test in 5

The bottleneck isn’t ideas, but hardware

And NVIDIA becomes the Intel of the AI age, powering not laptops, but minds

The next ChatGPT breakthrough? The next humanlike assistant? The next AI scientist?

It will almost certainly be trained on NVIDIA.

We used to ask: “What can AI do?”

Now, the question is: “How fast can we build it?”

And that speed is, increasingly, measured in NVIDIA chips.